Sophisticated trading firms active in listed derivatives markets understand the importance of efficiently managing data across multiple clearing brokers, commonly known as FCMs (Futures Commission Merchants). In an increasingly data-dependent world, where change is the only constant, these firms must develop and continuously reevaluate their data management processes. Having worked with a wide range of trading firms, including hedge funds, energy companies, commodities merchants, and institutional investors, we have come across and solved many challenges related to managing data across multiple clearing brokers, some of which we present in this short research note.

Start with the End

Whether a firm is already working with multiple FCMs or just about to start trading listed derivatives, it’s always a good time to begin creating a more resilient and flexible data infrastructure. The lack of uniformity in data formats and in symbology are two good places to start:

Build Processes that are Independent of Data Formats

Rarely are two clearing brokers supplying data in the same format. Rather than trying to build a rigid data pipeline, trading firms should develop flexible processes to allow them to ingest data from any FCM seamlessly and without additional investments in developer time and maintenance.
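
One way to picture a format-independent process is a thin mapping layer that translates each broker's column names into a single canonical schema, so that supporting a new FCM means adding a mapping rather than writing a new pipeline. The sketch below is illustrative only: the broker names, column headers, and canonical field names are all hypothetical, and real FCM files are typically far wider and messier.

```python
import csv
import io

# Hypothetical per-broker column mappings (source column -> canonical field).
# Real FCM layouts will differ; these names are assumptions for illustration.
FIELD_MAPS = {
    "broker_a": {"Acct": "account", "Sym": "symbol", "Qty": "quantity", "Px": "price"},
    "broker_b": {"ACCOUNT_ID": "account", "CONTRACT": "symbol",
                 "NET_POS": "quantity", "SETTLE": "price"},
}


def normalize(broker, raw_csv):
    """Translate one broker's CSV text into rows keyed by canonical field names."""
    mapping = FIELD_MAPS[broker]
    rows = []
    for record in csv.DictReader(io.StringIO(raw_csv)):
        # Keep only the mapped columns and rename them to the canonical schema.
        row = {canonical: record[source] for source, canonical in mapping.items()}
        row["quantity"] = int(row["quantity"])
        row["price"] = float(row["price"])
        row["broker"] = broker
        rows.append(row)
    return rows


rows = normalize("broker_b", "ACCOUNT_ID,CONTRACT,NET_POS,SETTLE\nA1,NYM CL DEC25,-5,71.25\n")
```

Downstream functions then read only the canonical fields and never need to know which broker a row came from.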

Accept that Derivatives Markets Lack a Universal Symbology

Traders and portfolio managers know exactly the symbols of the products that they trade, but these symbols often don’t carry over to post-trade processes. As a result, they often find themselves reviewing reports and data from their service providers that don’t resemble what they have traded. Even worse, the same futures contract executed with different brokers might have different symbols and names. It’s important that trading firms implement processes that allow them to use a consistent symbology from front to back.
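
A consistent front-to-back symbology can be approximated with a cross-reference table keyed by broker and broker symbol. The following is a minimal sketch under stated assumptions: the broker names, broker symbols, and internal symbol format are invented for illustration, and a production cross-reference would usually live in a maintained database rather than in code.

```python
# Hypothetical cross-reference: (broker, broker symbol) -> internal symbol.
# All entries are made up for illustration.
SYMBOL_XREF = {
    ("broker_a", "CLZ5"): "CL-2025-12",
    ("broker_b", "NYM CL DEC25"): "CL-2025-12",
}


def to_internal_symbol(broker, broker_symbol):
    """Return the firm's internal symbol, or None when the mapping is unknown."""
    key = (broker, broker_symbol.strip().upper())
    return SYMBOL_XREF.get(key)
```

Returning `None` for an unknown symbol, instead of raising or guessing, lets the caller route the record to a review queue.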

Problems Will Happen

Broker data is seldom perfect, and it’s safe to assume that it will be incomplete, inaccurate, or late at some point. To hedge against these inefficiencies, trading firms should develop robust processes to handle these issues and deploy early warning systems that help them identify and remediate problems quickly. Additionally, trading firms should prepare for wide disparities in both delivery times and the order in which data arrives, both of which can change daily.

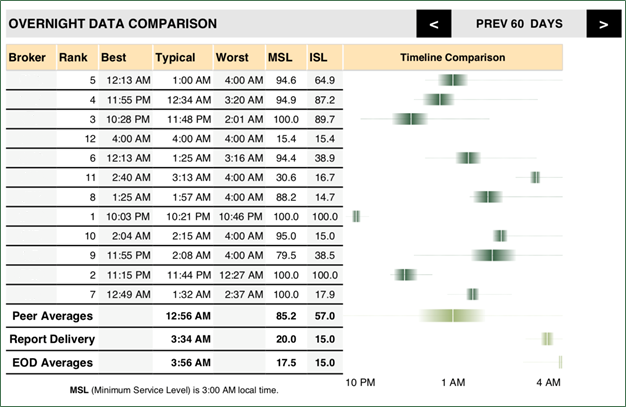

Our proprietary analysis, based on years of daily observations, demonstrates how FCMs, big and small, are not immune to challenges in post-trade data delivery regardless of their technology budgets.

Figure 1: Comparison of Overnight Data Transmission by Broker

According to the worst-case data from the chart above, you can expect at least one clearing broker to experience significant delays or issues in delivering post-trade data on any given day. Trading firms should also track the quality and timeliness of their clearing brokers’ data reporting services. To prepare for the inevitable, trading firms should take the following steps:

- Perform due diligence on their FCMs’ data delivery capabilities

- Verify that data received from each FCM matches internal records

- Evaluate their FCMs’ ability to re-run data to better prepare for potential issues
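
An early warning check for timeliness can be as simple as comparing each broker's file arrival time against a cutoff. The sketch below assumes arrival timestamps are already being recorded somewhere; the function name, broker names, and cutoff are hypothetical.

```python
from datetime import datetime


def delivery_alerts(expected_brokers, arrivals, cutoff):
    """Return {broker: status} for files that are missing or arrived after cutoff.

    arrivals maps broker name -> datetime the overnight file was received.
    Brokers that delivered on time are omitted from the result.
    """
    alerts = {}
    for broker in expected_brokers:
        received = arrivals.get(broker)
        if received is None:
            alerts[broker] = "missing"
        elif received > cutoff:
            alerts[broker] = "late"
    return alerts


# Illustrative values only.
cutoff = datetime(2024, 1, 3, 6, 0)
arrivals = {
    "broker_a": datetime(2024, 1, 3, 4, 30),
    "broker_b": datetime(2024, 1, 3, 7, 15),
}
alerts = delivery_alerts(["broker_a", "broker_b", "broker_c"], arrivals, cutoff)
```

Logging the same arrival times every day also gives a firm its own history of each FCM's delivery performance.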

Data is a Major Dependency

Intuitively, there’s no question that data is the lifeblood of post-trade operations. Therefore, trading firms need to identify which key functions are specifically dependent on the aggregation of data across multiple clearing brokers. While not exhaustive, the following post-trade functions are both operationally critical and heavily dependent on the timely delivery of data from FCMs:

- Reconciliation of trades and positions

- Reporting and analytics

- Alerting on compliance and business rules

- Post-trade risk analytics

Crowdsourcing Offers Significant Benefits

Each organization is unique in how it consumes and leverages data. Underneath that uniqueness is a common need for accuracy, a need that can be aided by the smart application of crowdsourcing.

New Symbols and Products Recognition

Keeping in mind that clearing brokers seldom use universal symbology or deliver consistent data, trading firms should consider using multi-broker aggregation systems that manage these differences instead of trying to avoid the unavoidable.

For example, it’s quite common to wrongly treat a newly encountered symbol in the post-trade data of a clearing broker as a new product. However, an effective crowdsourcing system would instead analyze the data that comes along with the new symbol, such as price ranges and maturity characteristics, to see if this new symbol is, in fact, an existing product that was cleared for the first time with a given FCM.
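
The matching idea can be sketched as a lookup against known products by maturity and price range. This is a simplified illustration, not a description of any particular system: the reference data, dates, price bands, and function name are all hypothetical, and a real matcher would likely weigh more attributes such as exchange, currency, and contract size.

```python
from datetime import date

# Hypothetical reference data: internal product -> expiry and recent price range.
# Dates and ranges are invented for illustration.
KNOWN_PRODUCTS = {
    "CL-2025-12": {"expiry": date(2025, 11, 20), "low": 60.0, "high": 85.0},
    "NG-2025-12": {"expiry": date(2025, 11, 25), "low": 2.0, "high": 5.0},
}


def candidate_matches(expiry, price, known=KNOWN_PRODUCTS):
    """List known products with the same expiry whose price range contains price."""
    return [
        name
        for name, ref in known.items()
        if ref["expiry"] == expiry and ref["low"] <= price <= ref["high"]
    ]
```

An empty result suggests a genuinely new product, a single result suggests an existing product seen under a new symbol, and multiple results call for manual review.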

Price Outliers

Crowdsourcing can also be a very effective way to control the accuracy of exchange-cleared data, and it is cheaper and easier to deploy than vendor-provided reconciliations. By comparing closing prices, decimal alignments, and other data received from clearing brokers against each other or against the trading history, many problems, such as bad valuations and out-of-range foreign exchange rates, can be identified.
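
As an illustration of comparing brokers against each other, the sketch below flags any broker whose closing price for a contract deviates from the cross-broker median by more than a relative tolerance. The function name, the 1% default tolerance, and the sample prices are assumptions chosen for the example.

```python
from statistics import median


def price_outliers(prices_by_broker, tolerance=0.01):
    """Return {broker: price} for prices deviating from the median by more than tolerance.

    tolerance is relative (0.01 means 1%). A zero median yields no flags.
    """
    mid = median(prices_by_broker.values())
    if mid == 0:
        return {}
    return {
        broker: price
        for broker, price in prices_by_broker.items()
        if abs(price - mid) / abs(mid) > tolerance
    }


# broker_c reports the same price without the decimal point (illustrative data).
flagged = price_outliers({"broker_a": 71.25, "broker_b": 71.25, "broker_c": 7125.0})
```

The median only identifies the odd one out when at least three brokers report the same contract. With two, a disagreement can be detected but not attributed, so the trading history would have to serve as the tiebreaker.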

Reconciliation is evidently a critical component of risk management. Quite often, this process is implemented inefficiently, resulting in time spent reconciling the reconciliation process instead of reconciling trading data. For instance, false positives can occur when cross-references are not configured properly in the reconciliation process.

Referential Data

Compared to a single-FCM infrastructure, using multiple clearing brokers poses several challenges for trading firms, but crowdsourcing can streamline the development and maintenance of a reliable referential database.

Conclusion

There is no denying the safety and increased services associated with FCM diversification, but it also introduces operational challenges that can create pain points even for the most sophisticated trading firms. The lack of consistent data and universal symbology within listed derivatives markets demands processes independent of clearing brokers’ data formats. Trading firms should deploy early warning systems that identify discrepancies in data received from FCMs and develop processes to remedy them. It is imperative for trading firms to identify and understand which of their post-trade functions are dependent on clearing data aggregation. For issues ranging from referential data to product recognition and price outliers, crowdsourcing can help streamline the development and maintenance of reliable trading datasets. Having a cohesive approach to data management that is flexible, that plans for failures, and that works seamlessly across clearing brokers will serve trading firms well both now and in the future.

Here at Thalēs, we have deep experience in helping solve many challenges related to managing data across multiple FCMs. If you are a fund manager, commodity merchant, or institutional investor and want to learn more about our innovative technology and services, contact us today.

About the Authors

Tim O’Shea is the President and COO of Thalēs Trading Solutions LLC, a technology-driven derivatives brokerage and capital raising firm servicing institutional market participants.

Paul Geiger is the President and Co-founder of Theorem Technologies, a post-trade data management portal and risk-type reporting solution built for Brokers, Hedge Funds, Investors and Traders.

The views expressed above are not necessarily the views of Thalēs Trading Solutions LLC or any of its affiliates (collectively, “Thalēs”). The information presented above is only for informational and educational purposes and is not an offer to sell or the solicitation of an offer to buy any securities or other instruments. Additionally, the above information is not intended to provide, and should not be relied upon for investment, accounting, legal or tax advice. Thalēs makes no representations, express or implied, regarding the accuracy or completeness of this information, and the reader accepts all risks in relying on the above information for any purpose whatsoever.